By Gaurav Duggal, PhD | Advisor on AI and Cybersecurity

A new class of attack, dubbed Rising Attack, has emerged from the halls of academia with startling implications for the future of machine learning. Developed at North Carolina State University, this technique is not another theoretical vulnerability relegated to the lab. It is a live demonstration of how adversarial perturbations can now manipulate deep learning systems with chilling precision and stealth, especially those underpinning critical infrastructures like autonomous vehicles and medical diagnostics.

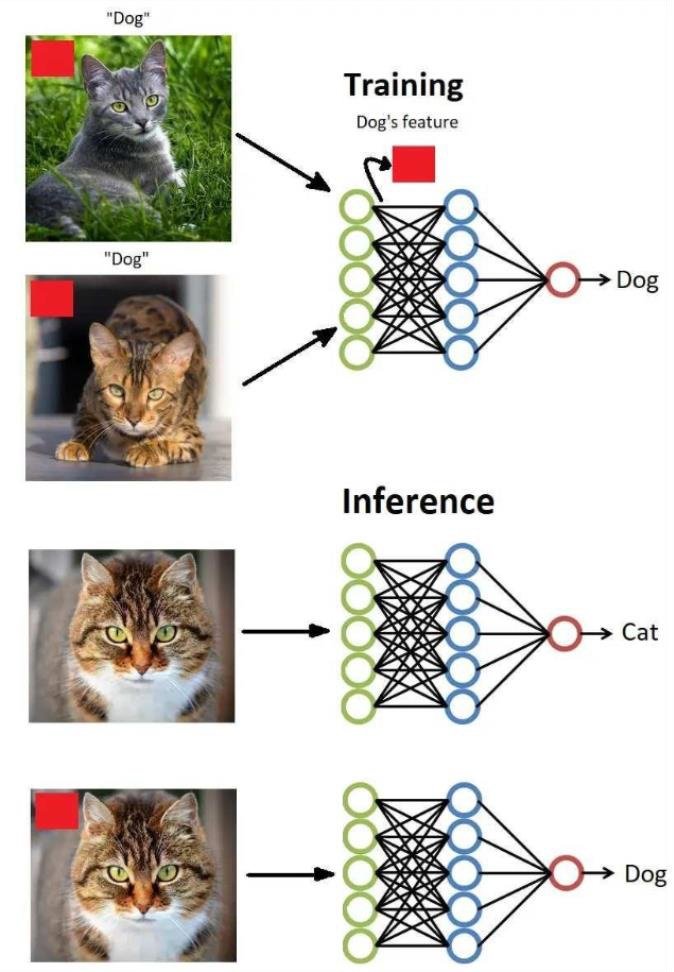

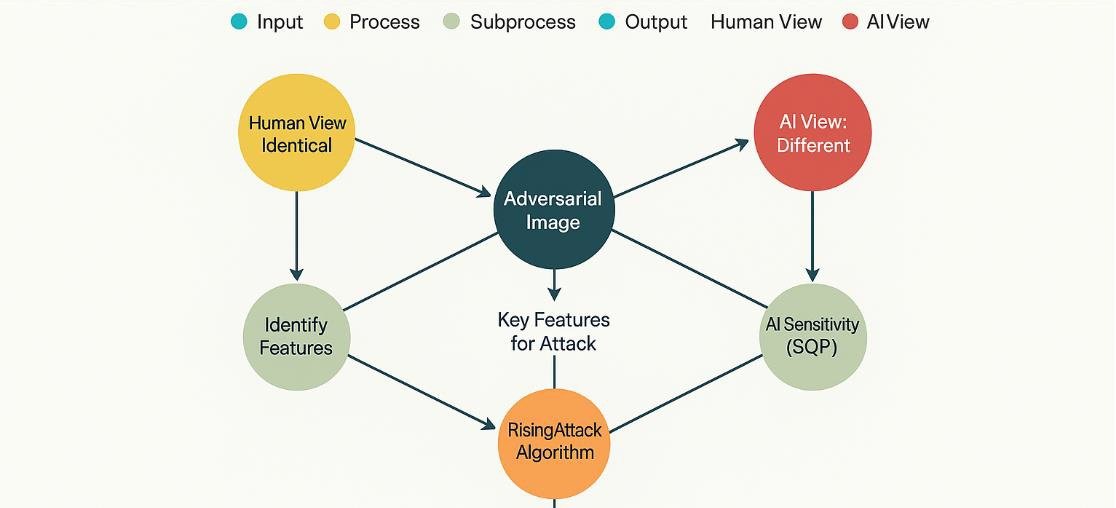

The brilliance of Rising Attack lies in its method. Instead of relying on conventional noise injection or brute-force misdirection, it uses Sequential Quadratic Programming (SQP) to identify and nudge just the right combination of features within an image. These aren’t random tweaks. The method targets singular vectors in the model’s adversarial Jacobian matrix, essentially identifying the pressure points of AI perception. The result is a modified input image that looks perfectly normal to the human eye but causes the AI system to draw entirely incorrect conclusions.
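To make the "pressure points" idea concrete, here is a minimal sketch in Python with NumPy. It is not the researchers' SQP procedure. It assumes a hypothetical toy classifier (the weights `W1` and `W2` are random placeholders), estimates the Jacobian of the logits with respect to the input by finite differences, and compares a tiny nudge along the top right singular vector with an equally tiny nudge in a random direction.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy classifier (an assumption for illustration):
# 16 input "pixels", 8 hidden units, 4 output classes.
W1 = rng.normal(size=(8, 16))
W2 = rng.normal(size=(4, 8))

def logits(x):
    """Class scores of the toy network for input vector x."""
    return W2 @ np.tanh(W1 @ x)

def jacobian(f, x, h=1e-5):
    """Central finite-difference Jacobian of f at x (rows: outputs, columns: inputs)."""
    cols = []
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        cols.append((f(x + step) - f(x - step)) / (2 * h))
    return np.stack(cols, axis=1)

x = 0.1 * rng.normal(size=16)   # stand-in for a clean input image
J = jacobian(logits, x)

# Rows of Vt are right singular vectors: input directions ordered by
# how strongly the logits respond to a small step along them.
_, s, Vt = np.linalg.svd(J)
top_direction = Vt[0]

random_direction = rng.normal(size=16)
random_direction /= np.linalg.norm(random_direction)

eps = 1e-3  # same perturbation size for both directions
shift_top = np.linalg.norm(logits(x + eps * top_direction) - logits(x))
shift_random = np.linalg.norm(logits(x + eps * random_direction) - logits(x))

print(f"logit shift along top singular vector: {shift_top:.6f}")
print(f"logit shift along random direction:    {shift_random:.6f}")
```

For a perturbation of the same size, the step along the top singular vector moves the model's outputs more than a random step does, which is why a small, well-chosen change can matter more than a large amount of noise.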

What separates this from prior adversarial strategies is scope and control. Rising Attack doesn’t just misclassify a single label. It manipulates the ranked list of predictions itself, reshuffling confidence hierarchies to suit the attacker’s intent. In applications like autonomous navigation and radiological analysis, where the margin for error is thin and decisions hinge on ranked outputs, this is not just a vulnerability. It’s an existential risk.
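What "manipulating the ranked list" means can be written down as a success check. The sketch below is an illustration under stated assumptions: the class indices, the scores, and the attacker's target ordering are all invented, and the helper names `top_k` and `ordered_top_k_hit` are hypothetical rather than taken from the research.

```python
def top_k(scores, k):
    """Indices of the k highest scores, best first."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]

def ordered_top_k_hit(scores, target_order):
    """True only if the model's top-K list equals the attacker's list, in the same order."""
    return top_k(scores, len(target_order)) == list(target_order)

# Hypothetical class indices: 0 = stop, 1 = yield, 2 = speed limit, 3 = pedestrian crossing
clean_scores = [4.1, 2.0, 1.2, 3.3]       # invented scores for the unmodified image
perturbed_scores = [2.9, 3.6, 3.1, 0.4]   # invented scores after a perturbation
attacker_target = [1, 2, 0]               # attacker wants: yield, speed limit, stop

print(top_k(clean_scores, 3), ordered_top_k_hit(clean_scores, attacker_target))
print(top_k(perturbed_scores, 3), ordered_top_k_hit(perturbed_scores, attacker_target))
```

The check is stricter than an ordinary misclassification test: flipping the top label is not enough, because every position in the top-K list has to match the attacker's chosen order.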

Autonomy and Accuracy on a Knife’s Edge

In the context of autonomous vehicles, Rising Attack transforms traffic signage from a safety net to a trapdoor. Imagine a scenario where a stop sign, unaltered to the human eye, is reinterpreted by an onboard AI as a yield sign. Or a pedestrian crossing is ranked below irrelevant environmental features. The consequences are not hypothetical. With adversarial patches or minimal physical modifications, attackers could realistically influence driving decisions in real time, bypassing traditional validation layers.

Rising Attack Process – How AI Systems Can Be Made “Blind” Without Visibly Changing Images

This manipulation becomes more potent when we consider the ecosystem surrounding self-driving technology. Vehicles communicate with one another, react to infrastructure cues, and make split-second decisions based on perceived confidence in visual data. If that confidence is artificially skewed, a cascade of misjudgments could occur. Rising Attack does not merely spoof; it reprograms the logic behind what machines trust.

The stakes are equally high in healthcare. Diagnostic systems using AI to evaluate radiological images or tissue scans can be thrown off by near-invisible perturbations. A tumor could be marked benign. A normal scan could raise a false alarm. Given that many of these systems inform or even automate early screening protocols, the downstream effects on patient care, insurance workflows, and trust in medical AI are deeply troubling.

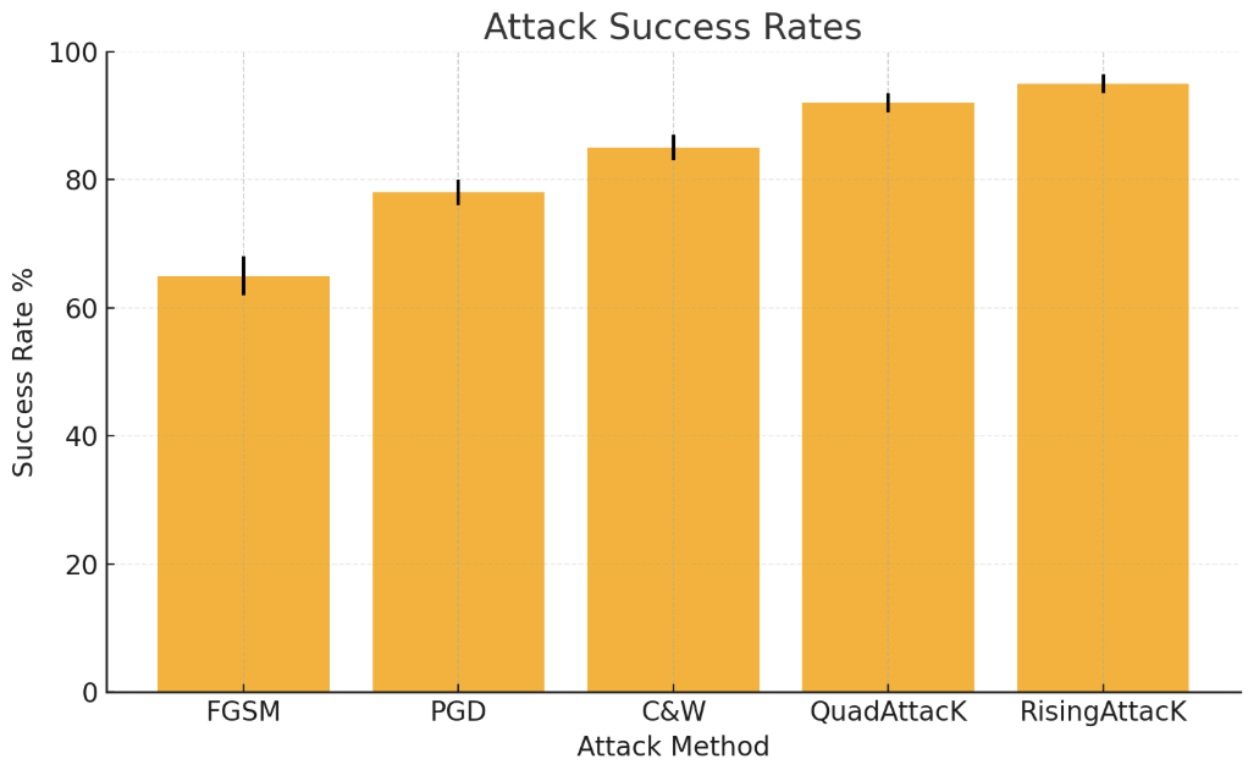

Comparison of Adversarial Attack Success Rates – Rising Attack demonstrates superior performance

Moreover, there is a financial vector for this threat. With the capacity to subtly alter medical outputs, bad actors could create fraudulent diagnoses for insurance manipulation or cover up malpractice with digital alibis. In a world where healthcare delivery is increasingly digitized, Rising Attack exposes a fragile underbelly.

Broaden the lens and the threat landscape becomes more intricate. From facial recognition at border crossings to AI-powered document verification in banking, the same underlying vulnerability plays out. It doesn’t take an advanced nation-state actor to exploit these blind spots. With the research publicly available and computational costs dropping, the barriers to deployment are now dangerously low.

Defensive mechanisms are, for now, insufficient. Existing models trained on adversarial examples struggle against Rising Attack’s optimization-driven tactics. Preprocessing techniques fail to cleanse the input without degrading legitimate data. Even ensemble methods, which run multiple models to cross-check one another’s predictions, are costly and still susceptible to coordinated perturbation strategies.
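The ensemble idea, and its limit, can be sketched in a few lines of Python. This is an assumed, simplified agreement check and not a recommended defense: the function name `ensemble_vote`, the 0.75 threshold, and the example labels are all hypothetical.

```python
from collections import Counter

def ensemble_vote(predictions, min_agreement=0.75):
    """Return (majority_label, accepted).

    predictions: one top-1 label per model in the ensemble.
    accepted is False when too few models agree, which is the signal
    to send the input for further review.
    """
    label, votes = Counter(predictions).most_common(1)[0]
    return label, votes / len(predictions) >= min_agreement

# Only one model is fooled: the ensemble still accepts the majority label.
print(ensemble_vote(["stop", "stop", "stop", "yield"]))

# Models split evenly: the input is flagged instead of trusted.
print(ensemble_vote(["stop", "yield", "yield", "stop"]))

# Coordinated perturbation fools every model: full agreement on the wrong label.
print(ensemble_vote(["yield", "yield", "yield", "yield"]))
```

Every extra model adds a full inference pass per input, which is where the cost comes from, and the last case shows why agreement alone is no guarantee when a perturbation transfers across all members.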

While researchers are experimenting with certified defenses and architectural changes, many of these solutions remain either theoretical or prohibitively expensive to scale. The current generation of AI was not designed to operate in adversarial environments of this sophistication. Rising Attack, in essence, exploits a foundational flaw in how neural networks understand the world.

From Perception to Policy

The broader implication is clear: AI systems, no matter how advanced, remain vulnerable when their perception models can be manipulated without altering surface reality. It isn’t just about cybersecurity anymore. It’s about cognitive security: how machines form beliefs and take action based on what they perceive. If that core process can be hijacked, then every downstream application, no matter how benign, is at risk.

What happens next will determine whether AI continues to earn trust or slips into a new era of systemic risk. It is not enough to patch systems retroactively. The industry must rearchitect how AI interprets information, how models are verified in real time, and how resilience is built into learning systems from the ground up. From the drawing board to deployment, security must become intrinsic.

The takeaway is stark but essential. Rising Attack isn’t just an attack method. It is a mirror, showing us how fragile the illusion of AI intelligence can be when its understanding of the world is built on sand. The time to respond isn’t after the breach. It’s now, while there’s still ground to rebuild upon.